Secure Destruction: File Shredding versus Delete and Wiping Free Space the Right Way

Simple file deletion is a fundamental security failure. When a user sends a file to the Recycle Bin and empties it, the action only removes the file’s reference marker from the Master File Table (MFT) or similar file system index. The actual data, the physical magnetic or electrical imprint, remains on the disk, hidden in what the operating system considers unallocated space.

Software file shredding, by contrast, overwrites a file’s data blocks before deleting it, leaving recovery tools nothing to reconstruct. On traditional Hard Disk Drives (HDDs), this is an effective defense against recovery tools. On modern Solid State Drives (SSDs), however, the same approach cannot be relied upon.

To truly destroy sensitive data remnants left by past standard deletions, users must employ secure tools like Microsoft’s SDelete to overwrite all unallocated free space on HDDs. For SSDs and NVMe drives, hardware-level commands, such as ATA Secure Erase, are the only verifiable solutions.

1. The Deletion Deception: Why “Emptying the Trash” Fails

1.1 The Illusion of Deletion

The term “delete” is fundamentally misleading in the context of digital security. When data is deleted manually, by removing it from the recycling bin or trash folder, the operating system (OS) performs a swift, logical operation. It simply updates the file system index, marking the occupied sectors as “available” for future use. The system signals that these blocks can now be overwritten by new content, but it does not mandate immediate erasure.

The underlying data blocks themselves remain physically intact on the storage device. This is not a security flaw; it is a performance feature. The quick deletion process saves time and system resources, but it introduces the significant vulnerability known as data remanence.

1.2 Data Remanence and Forensic Recovery

Data remanence refers to the measurable physical representation of information remaining after an attempt has been made to erase or remove it. This residual data is concentrated in the unallocated space, the portion of the disk that is not currently assigned to any active files.

Digital forensics practitioners routinely exploit data remanence. Specialized tools, such as Recuva or Wondershare Recoverit, scan the raw sectors of the unallocated space for file signatures, headers, and metadata fragments. These programs can often successfully bypass the broken file system pointer and reconstruct entire documents, proprietary logs, and sensitive personal information, proving that the original data was never destroyed. This technique is so effective that it can often recover data even from drives that have been quick-formatted.
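The signature scan described above can be illustrated with a minimal sketch. It assumes the "unallocated space" is already available as a byte buffer, and the small signature table and the `find_signatures` name are illustrative choices, not the method used by any particular recovery product.

```python
# Toy file-signature scan over a raw byte buffer (illustrative only).
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "pdf": b"%PDF-",
    "png": b"\x89PNG\r\n\x1a\n",
    "zip": b"PK\x03\x04",
}

def find_signatures(raw: bytes) -> list:
    """Return sorted (offset, type) pairs for every known header found in raw."""
    hits = []
    for name, magic in SIGNATURES.items():
        start = 0
        while True:
            pos = raw.find(magic, start)
            if pos == -1:
                break
            hits.append((pos, name))
            start = pos + 1
    return sorted(hits)

# Simulated unallocated space: zero filler with two leftover file headers.
image = b"\x00" * 512 + b"%PDF-1.7 ..." + b"\x00" * 500 + b"\xff\xd8\xff\xe0" + b"\x00" * 100
print(find_signatures(image))  # [(512, 'pdf'), (1024, 'jpeg')]
```

Real carving tools go further (footers, fragment reassembly, file system metadata), but the principle is the same: the headers are still on disk, so they can be found.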

1.3 Key Definition: Shredding versus Wiping

While the terms are often used interchangeably, distinguishing between file shredding and free space wiping clarifies the security process:

- File Shredding (Secure Deletion): This targets specific active files or folders. The software preemptively overwrites the file’s existing data blocks with pseudorandom characters or specific patterns multiple times before instructing the OS to delete the file system pointer. This destroys the data at its source and prevents recovery from that specific file’s location.

- Free Space Wiping (Sanitization): This targets the entire unallocated space on a drive, filling it completely with overwriting patterns. This is performed to eliminate the remnants of all files that were previously deleted using the insecure standard deletion method. This step is crucial for cleaning up the digital debris left behind by historical use.
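To make the file-shredding definition concrete, here is a minimal sketch of overwrite-then-delete. It is an assumption-laden illustration, not how SDelete or Eraser are implemented: the `shred_file` name and its defaults are hypothetical, it ignores file names, slack space, and journaling or copy-on-write file systems, and it is only meaningful on HDDs.

```python
import os
import secrets

def shred_file(path: str, passes: int = 3, chunk_size: int = 1024 * 1024) -> None:
    """Overwrite a file in place with random bytes, then delete it."""
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(secrets.token_bytes(n))
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # push this pass to the device before the next one
    os.remove(path)  # only now is the file system entry removed
```

The order matters: the data blocks are overwritten while the file still owns them, and the index entry is removed last.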

2. Data Destruction Standards: The Best Way to Overwrite Data (HDD Focus)

Secure overwriting methods use specific sequences of ones, zeros, and random bits to physically scramble the data signature on magnetic media (HDDs). The efficacy of these methods hinges on the number of passes and the complexity of the pattern used.

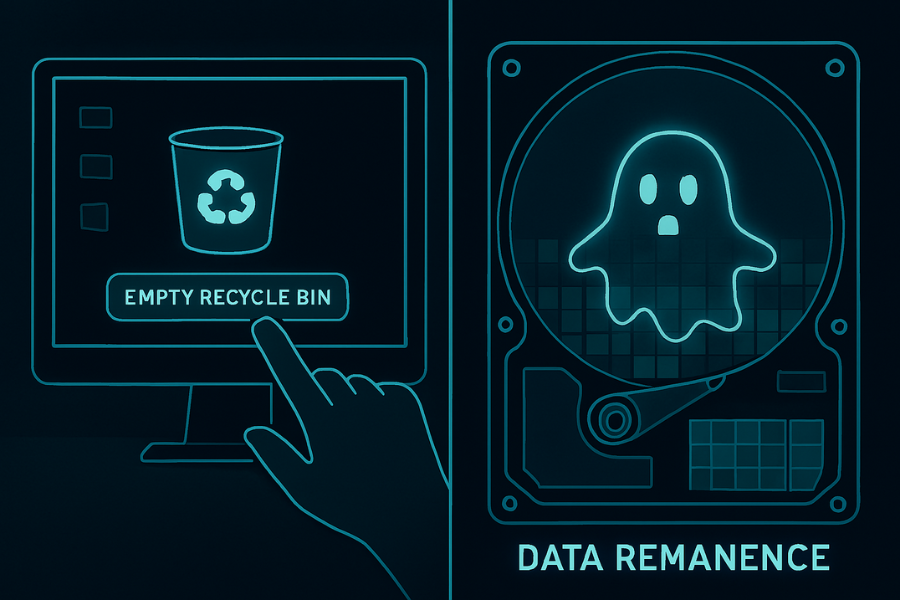

2.1 The Philosophy of Multiple Passes

The need for multiple overwrite passes originated from older magnetic hard drive technology that used less precise recording methods (like MFM or RLL encoding). Experts hypothesized that residual magnetic fields (ghost data) could remain, potentially allowing sophisticated laboratory equipment (such as Magnetic Force Microscopy) to detect traces of the original data beneath a single layer of overwriting.

For decades, this led to the adoption of multi-pass standards that employed specific character sets to neutralize any residual magnetism.

2.2 Standard Comparison: DoD versus Gutmann

The industry primarily recognizes a few robust standards:

- DoD 5220.22-M: This standard, established by the U.S. Department of Defense, is the benchmark for secure destruction. It typically involves three or seven overwrite passes, often using a specific sequence of a character (like a zero), its complement (a one), and a final pass of random data, followed by verification. The DoD method is widely regarded as a credible and efficient industry and government standard.

- The Gutmann Method: Developed in 1996 by Peter Gutmann, this is perhaps the most extreme standard, involving 35 specific and complex overwrite passes. The Gutmann sequence was designed to counter every known encoding scheme used by older hard drives.

The extreme nature of the Gutmann method, however, renders it impractical for modern use. Running 35 passes on a modern, high-capacity hard drive is prohibitively slow, potentially taking several days to complete a 1TB disk. Because of the increased areal density and precision of modern hard drive read/write heads, a single overwrite replaces the magnetic signal so thoroughly that one high-quality pass of random data or a 3-pass DoD routine is almost always sufficient to prevent recovery. Recommending Gutmann today therefore incurs a massive performance cost without a measurable security gain over the DoD standard.
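A rough back-of-the-envelope estimate shows how pass count drives wipe time. The 150 MB/s sustained write speed is an assumption for illustration; real drives may be slower, and verification passes add further time.

```python
def wipe_hours(capacity_bytes: float, passes: int, throughput_mb_s: float) -> float:
    """Hours needed to write `passes` full passes at a sustained throughput."""
    seconds = passes * capacity_bytes / (throughput_mb_s * 1_000_000)
    return seconds / 3600

ONE_TB = 1_000_000_000_000  # decimal terabyte, as drives are marketed
ASSUMED_MB_S = 150          # assumed sustained HDD write speed

for name, passes in [("single pass", 1), ("DoD 3-pass", 3), ("Gutmann", 35)]:
    print(f"{name}: {wipe_hours(ONE_TB, passes, ASSUMED_MB_S):.1f} h")
```

Under these assumptions a single pass takes about two hours, while 35 passes take roughly 65 hours of continuous writing.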

Table 2.2: Comparison of Secure Erase Standards for HDDs

| Method Name | Passes | Primary Pattern | Security Context | Speed (Relative) |
| --- | --- | --- | --- | --- |
| Write Zero (Single Pass) | 1 | Zeros (0x00) | Sufficient for most modern HDDs | Very Fast |
| DoD 5220.22-M (3-Pass) | 3 | Zero, One, Random (with verification) | Credible Government/Corporate Compliance | Moderate |
| Gutmann Method | 35 | 35 complex patterns | Extreme, Legacy (Impractical for daily use) | Extremely Slow |
| Random Data | 1 to 3+ | Pseudo-random bytes | Strong security, highly efficient on modern disks | Fast to Moderate |

3. The SSD and NVMe Problem: Why Software Overwriting Breaks Down

The data destruction landscape changed entirely with the widespread adoption of Solid State Drives and NVMe technology. The architecture of flash memory storage fundamentally breaks the assumption that software overwriting works.

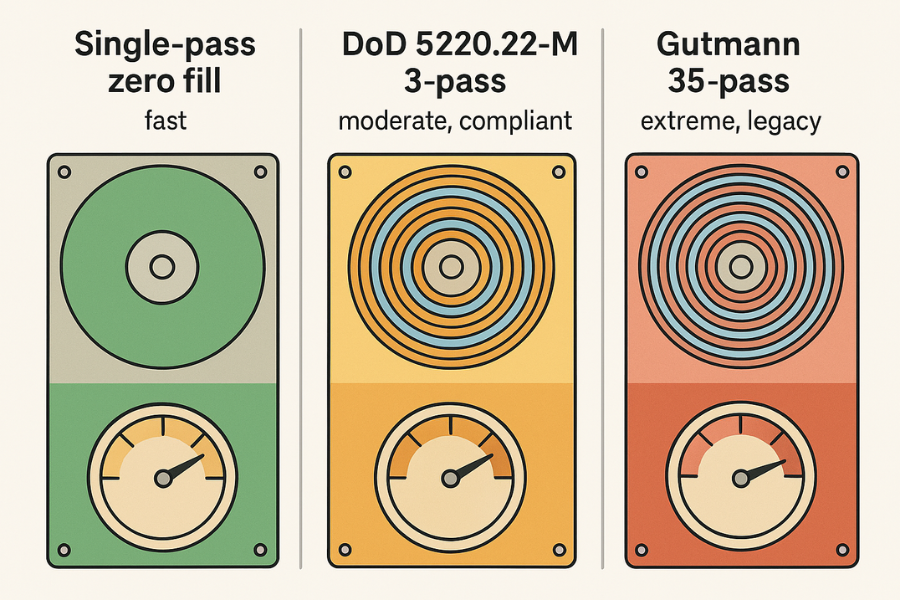

3.1 The FTL and Wear-Leveling Problem

SSDs and NVMe devices rely on a sophisticated internal controller, specifically the Flash Translation Layer (FTL), to manage data movement and prolong the drive’s lifespan through wear leveling. Flash memory cells have a finite number of write cycles. Wear leveling ensures that write operations are distributed evenly across all physical blocks, preventing any single cell from prematurely failing.

When the OS deletes a file, it sends a TRIM command to the SSD controller, informing it that the associated data blocks are no longer needed and can be internally recycled during the garbage collection process. However, this cleanup is handled asynchronously by the drive’s firmware.

3.2 Why Software Shredding Fails on SSDs

The wear-leveling mechanism prevents software overwriting utilities from verifying physical destruction. When a shredding tool attempts to overwrite a specific address on an SSD, the FTL intercepts this command. Instead of physically destroying the old data at its current location (which would accelerate wear), the FTL writes the new overwrite pattern (e.g., zeros) to a fresh block elsewhere on the NAND flash chips.

The original, sensitive data remains intact on the physical chip, marked as “stale” or “garbage” by the FTL. While the drive’s internal garbage collection will eventually erase that block, the process is asynchronous and cannot be guaranteed or monitored by the user. Therefore, relying on software shredders like SDelete or Eraser for file-specific security on an SSD is largely futile and provides a false sense of security.
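The remapping behavior can be shown with a toy model. This is a deliberately simplified sketch: the `ToyFTL` class is hypothetical, real controllers manage erase blocks, over-provisioning, and wear counters, and nothing here reflects any vendor's firmware.

```python
class ToyFTL:
    """Maps logical block addresses (LBAs) to physical pages; never overwrites in place."""

    def __init__(self, physical_pages: int) -> None:
        self.pages = [None] * physical_pages  # raw NAND contents
        self.mapping = {}                     # LBA -> physical page index
        self.stale = set()                    # pages awaiting garbage collection
        self.next_free = 0

    def write(self, lba: int, data: bytes) -> None:
        if lba in self.mapping:
            self.stale.add(self.mapping[lba])  # old page is only marked stale
        self.pages[self.next_free] = data      # new data lands on a fresh page
        self.mapping[lba] = self.next_free
        self.next_free += 1

    def read(self, lba: int) -> bytes:
        return self.pages[self.mapping[lba]]

    def garbage_collect(self) -> None:
        for page in self.stale:
            self.pages[page] = None            # physical erase happens only here
        self.stale.clear()

ftl = ToyFTL(physical_pages=8)
ftl.write(0, b"SECRET")         # original file contents
ftl.write(0, b"\x00" * 6)       # the "shredder" overwrites the same logical address
print(ftl.read(0))              # the OS sees zeros
print(b"SECRET" in ftl.pages)   # True: the old page still holds the data
```

From the host's point of view the overwrite succeeded, yet the original bytes survive on the physical medium until `garbage_collect` runs, and the host has no control over when that happens.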

3.3 The Definitive SSD Solutions

Because the destruction process must occur at the firmware level, SSD sanitization requires specialized approaches:

- ATA Secure Erase / Sanitize: This is the preferred method for non-encrypted SSDs. These commands are implemented in the drive’s firmware (NVMe drives expose their own equivalent format and sanitize commands). When executed (usually via a utility run in a bootable environment), the command instructs the SSD controller to internally erase all memory cells, returning the drive to a factory-raw state. It is fast and comprehensive.

- Cryptographic Erase (CE): For Self-Encrypting Drives (SEDs), all data is continuously encrypted by hardware before it is written to the flash memory. Cryptographic Erase involves instantaneously destroying or changing the internal media encryption key. This renders all existing encrypted data indecipherable and unrecoverable, regardless of whether the original blocks have been physically wiped or not.

- Physical Destruction: When dealing with highly sensitive data or when regulatory compliance (such as GDPR or HIPAA) mandates absolute verification, physical destruction is the only foolproof method. This involves crushing or shredding the media into tiny particles, usually 4mm or smaller, to ensure that the individual memory chips are destroyed and data recovery becomes physically impossible.
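The Cryptographic Erase idea from the list above can be illustrated with a toy model. The cipher below is a hash-based keystream built only for this demonstration and must not be used for real encryption; actual self-encrypting drives use hardware ciphers, typically AES. The variable names are illustrative.

```python
import hashlib
import secrets

def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || offset) blocks. Demo only."""
    out = bytearray()
    for i in range(0, len(data), 32):
        block = hashlib.sha256(key + i.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[i:i + 32], block))
    return bytes(out)

plaintext = b"patient record 42"
media_key = secrets.token_bytes(32)            # held only inside the drive controller
stored = keystream_xor(media_key, plaintext)   # what the flash cells actually contain

assert keystream_xor(media_key, stored) == plaintext  # normal read path

media_key = secrets.token_bytes(32)            # cryptographic erase: key is replaced
recovered = keystream_xor(media_key, stored)   # cells untouched, contents now noise
print(recovered == plaintext)                  # False
```

No flash block is rewritten in this sketch; the stored bytes simply become undecipherable once the original key is gone, which is why the operation is near-instant.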

4. Windows Tutorial: Wiping Free Space via Command Line (SDelete)

For users operating on traditional HDDs, securely wiping the free space is essential to clean up remnants of all previously standard-deleted files. Microsoft’s Sysinternals utility, SDelete (Secure Delete), is the official command-line tool for this purpose.

4.1 Key Outcome and Prerequisites

- Outcome: All unallocated blocks on the target HDD are overwritten using secure methods, destroying previously deleted file remnants.

- Prerequisites: Download SDelete (SDelete.zip) from Microsoft Sysinternals. The process requires running Command Prompt (CMD) with Administrator privileges.

4.2 Step 1: Download and Prepare SDelete

- Action: Download the SDelete utility from the official Microsoft site.

- Action: Extract the sdelete.exe file.

- Action: Place the executable in an easily accessible location, such as a new directory named C:\Tools\SDelete.

4.3 Step 2: Open Elevated Command Prompt

- Action: Open the Windows Start menu.

- Action: Type “CMD.”

- Action: Right-click “Command Prompt” and select “Run as administrator” to ensure the necessary permissions are granted for system-level disk operations.

- Action: Navigate to the SDelete directory:

cd C:\Tools\SDelete

4.4 Step 3: Execute Secure Free Space Wipe

SDelete provides two primary options for cleaning free space: zero-fill and secure overwrite.

- Fastest Single-Pass Wipe (Zero-Fill): This is generally considered adequate for modern HDDs when speed is a factor.

- Command:

sdelete -z C:

- Secure Overwrite Wipe: This runs SDelete’s secure cleaning routine on the unallocated space. SDelete performs a single overwrite pass by default; add the -p parameter to request more (for example, -p 3 for three passes).

- Command:

sdelete -c C:

The -c flag instructs SDelete to clean the free space. The utility accomplishes this by creating a massive temporary file that consumes all available unallocated space, overwrites it with the chosen pattern for the requested number of passes, and then deletes the temporary file. This intensive I/O process can take several hours depending on the amount of free space being cleaned.
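The temporary-file technique can be sketched in a few lines. This is a simplified illustration under stated assumptions, not SDelete's implementation: the `fill_free_space` name, the temporary file name, and the `max_bytes` safety cap are all hypothetical, and the real tool handles cases this sketch ignores.

```python
import os
import shutil

def fill_free_space(directory: str, chunk_size: int = 1024 * 1024, max_bytes=None) -> int:
    """Write zeros to a temp file until the volume is full (or max_bytes), then delete it."""
    path = os.path.join(directory, "wipe_free_space.tmp")  # hypothetical file name
    limit = max_bytes if max_bytes is not None else shutil.disk_usage(directory).free
    chunk = bytes(chunk_size)  # a buffer of zero bytes
    written = 0
    try:
        # Unbuffered, so a full volume surfaces as an OSError on write.
        with open(path, "wb", buffering=0) as f:
            try:
                while written < limit:
                    n = min(chunk_size, limit - written)
                    written += f.write(chunk[:n])
                os.fsync(f.fileno())
            except OSError:
                pass  # volume filled up before the limit; treat as done
    finally:
        if os.path.exists(path):
            os.remove(path)  # releasing the file returns the (now zeroed) space
    return written
```

Calling it without `max_bytes` will genuinely fill the volume, so a cautious first run might look like `fill_free_space("D:\\", max_bytes=100 * 1024 * 1024)`.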

5. Windows Tutorial: Secure File Shredding with Eraser (GUI)

For users who prefer a graphical interface for managing secure destruction tasks, Eraser is a well-designed, free, open-source utility for Windows that supports advanced scheduling and robust erasure methods.

5.1 Key Outcome and Prerequisites

- Outcome: Specific files or folders are immediately and permanently shredded using a configured multi-pass algorithm, such as the DoD 3-pass standard.

- Prerequisites: Install the Eraser software (supports recent versions of Windows 10 and 11).

5.2 Step 1: Configure Default Erasure Method

Consistency in security requires standardizing the destruction method:

- Action: Open the Eraser application.

- Action: Navigate to Settings.

- Action: Locate the ‘Default file erasure method’ drop-down menu.

- Action: Select a robust, widely accepted standard such as “US DoD 5220.22-M (8-306./E) (3 passes)”. This balances high security with acceptable speed.

- Action: Click “Save Settings.”

5.3 Step 2: Targeted File Shredding via Context Menu

Eraser integrates directly into the Windows File Explorer, allowing immediate shredding of active files:

- Action: Navigate to the sensitive file or folder within File Explorer.

- Action: Right-click the file.

- Action: Hover the cursor over the Eraser icon in the context menu that appears.

- Action: Select Erase from the secondary menu.

- Action: Confirm the subsequent security prompt. The selected file is immediately processed through the chosen multi-pass overwrite method and then securely removed.

5.4 Step 3: Wiping Unused Disk Space (GUI Method)

Eraser can also handle the free space wiping task, offering a GUI alternative to SDelete:

- Action: Open Eraser.

- Action: Click the down arrow located next to the “Erase Schedule” button.

- Action: Select New Task.

- Action: In the Task Properties window, set “Task Type” to Run immediately.

- Action: Click Add Data.

- Action: Set “Target Type” to Unused space on drive.

- Action: Select the desired drive letter (e.g., D: or C:).

- Action: Click OK. The task will begin filling and overwriting the unallocated space using the default erasure method configured in Step 1.

6. Troubleshooting Common Secure Wiping Errors

Secure wiping tools interact directly with the deepest layers of the operating system and file structure, leading to specific errors that often require technical command-line diagnosis.

6.1 SDelete Temp File Creation Failure

When SDelete runs a free space wipe (-c), it must create a temporary file large enough to occupy all free space on the drive. If the application cannot successfully create this file, the operation halts, often resulting in an error message indicating a failure to create the cleanup file.

The root cause of this failure is often that the %TEMP% environment variable, which dictates where temporary system files should be created, points to a folder that no longer exists or is inaccessible.

- Solution: The resolution involves diagnosing the currently set path and recreating the missing directory. In an elevated Command Prompt, the user can type SET TEMP to view the full path of the temporary directory. If the displayed path (e.g., C:\Users\Username\AppData\Local\Temp\1) is incorrect or the final folder does not exist, the user must manually recreate the missing directory. Once the path is valid, SDelete runs successfully.
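The same check can be scripted. The sketch below assumes the fix is simply to recreate whatever directory the variable names; the `ensure_temp_dir` helper is a hypothetical name, not part of SDelete or Windows.

```python
import os

def ensure_temp_dir(var: str = "TEMP") -> str:
    """Return the directory named by an environment variable, creating it if missing."""
    path = os.environ.get(var)
    if not path:
        raise RuntimeError(f"{var} is not set")
    os.makedirs(path, exist_ok=True)  # recreates any missing folders along the path
    return path
```

Running `ensure_temp_dir()` from an elevated Python session before retrying the wipe is equivalent to inspecting SET TEMP and recreating the folder by hand.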

6.2 Troubleshooting Table: Symptom to Fix

Table 6.2: Secure Wiping Symptom to Fix

| Symptom / Error Message | Root Cause | Solution / Fix |
| --- | --- | --- |
| SDelete: Could not create free-space cleanup file | The system’s %TEMP% variable points to a non-existent or inaccessible temporary directory. | In CMD, use SET TEMP to view the path, and manually recreate the missing directory structure. |
| Eraser Task reports “Failed” or “Incomplete” | Antivirus or other background security software is locking the file handles, preventing the low-level overwriting. | Temporarily disable all antivirus and security utilities, then restart the Eraser task. |
| System slows dramatically during wipe | The operation requires 100% disk I/O and processor resources for generating and writing data. | Schedule large free space wipes to run overnight. For SDelete, reduce passes using the -p parameter. |
| Data remains recoverable after shredding (SSD ONLY) | Software failed to destroy the data due to the FTL diverting the write commands. | Do not rely on software shredding for SSDs. Use hardware-based solutions (ATA Secure Erase or Physical Destruction). |

7. Integrating Destruction into a Secure Data Lifecycle

File destruction should never be viewed as an afterthought but as an integrated component of a robust data lifecycle. Whenever sensitive data is created, it must be protected by encryption, and when its unencrypted copy is no longer needed, it must be securely destroyed.

7.1 Contextualizing Encryption Cleanup

Encryption software, such as Folder Lock, which utilizes AES-256 to create military-strength digital vaults, often includes integrated shredding features. This integration is critical because after a user encrypts a document, the original, unencrypted source file remains on the disk. If this original copy is only standard-deleted, it can still be recovered.

The integrated shred function, therefore, automates the essential cleanup step: immediately and permanently destroying the unencrypted source file after the encrypted copy is safely created and stored. This confirms that the only accessible version of the sensitive data is the ciphertext protected within the container.

7.2 Hardening the Cryptographic Key (VeraCrypt PIM)

For maximum data security, the integrity of the encryption key is as vital as the destruction of the original data. If a user employs full-disk or file-container encryption using tools like VeraCrypt, the key derivation function (KDF) protects the system against brute-force attacks.

KDFs transform the user’s password into the key used to decrypt the volume header, adding computational steps (iterations) to slow down cracking attempts. The longer this process takes, the greater the difficulty for an attacker trying to guess the password, even if they use massive GPU arrays.
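The effect of the iteration count can be observed directly with Python's standard library. This sketch uses `hashlib.pbkdf2_hmac` with SHA-512; the password, salt size, and `derive_key` helper are illustrative, and it does not reproduce VeraCrypt's volume header format.

```python
import hashlib
import os
import time

def derive_key(password: str, salt: bytes, iterations: int) -> bytes:
    """Derive a 64-byte key with PBKDF2-HMAC-SHA-512."""
    return hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations, dklen=64)

salt = os.urandom(64)
for iterations in (1_000, 100_000):
    start = time.perf_counter()
    derive_key("correct horse battery staple", salt, iterations)
    print(f"{iterations:>7} iterations: {time.perf_counter() - start:.3f} s")
```

The cost grows linearly with the iteration count, and an attacker pays that same cost for every password guess.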

The Role of PIM

VeraCrypt introduced the Personal Iterations Multiplier (PIM) to allow users to manually control this work factor. A higher PIM value mandates more internal hashing computations.

For non-system encryption (file containers) using the widely compatible PBKDF2-HMAC, the number of iterations is calculated as:

Number of Iterations = 15000 + (PIM x 1000)

Before the PIM feature, VeraCrypt containers used a fixed 500,000 iterations for PBKDF2-HMAC/SHA-512. That count corresponds to a PIM of 485, and it is what VeraCrypt still applies when the PIM field is left empty. Setting a PIM below 485 lowers the iteration count and leaves the encryption more exposed to brute-force attacks, especially if the password is shorter than the recommended 14 to 16 characters. Setting it higher raises the work factor at the cost of slower mounting.

A higher PIM significantly increases the computational barrier, ensuring that even if an attacker gains physical possession of the drive, the complexity of cracking the password ensures data confidentiality for decades.

Table 7.2: Data Protection Strategy: Scope and Resilience

| Action | Scope | Primary Threat Mitigated | Persistence Risk | Hardware Dependency |
| --- | --- | --- | --- | --- |
| Standard File Delete | File Pointer/Index | None (Casual Access) | High (Forensic Recovery) | N/A |
| Secure File Shredding (Software) | Specific File Blocks | File Recovery Software | Medium (Ineffective on SSD/NVMe) | HDD Only |
| Wiping Free Space (SDelete) | Unallocated Blocks | Recovering Deleted Fragments | Low (Ineffective on SSD/NVMe) | HDD Only |
| ATA Secure Erase (Firmware) | Entire Drive | Physical Recovery, Data Remanence | None (Full Sanitization) | ATA/SATA drives (NVMe drives use their own format/sanitize commands) |
| Full Disk Encryption (VeraCrypt) | All Data (At Rest) | Unauthorized Access/Theft | None (Without Key/Password) | N/A |

8. Proof of Work and Technical Verification

Effective data sanitization requires precise settings and confirmation steps. Ignoring configuration details, especially when pairing shredding with encryption, compromises the entire security chain.

8.1 Recommended Software Settings Snapshot

When encrypting files for transfer or storage (e.g., using 7-Zip or similar container software), the configuration must prioritize both the algorithm strength and the protection of metadata:

- Encryption Algorithm: AES-256 is the required industry standard for robust security.

- Metadata Protection: Users must ensure the “Encrypt file names” option is checked during archive creation. If file names are not encrypted, even if the contents are protected, an attacker can analyze the metadata to identify the nature of the sensitive data being protected.
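For scripted use, the same two settings map onto 7-Zip command-line switches. The sketch below only builds the argument list; the `build_7z_command` helper and the source file name are hypothetical, and it assumes the `7z` executable is on the PATH.

```python
def build_7z_command(archive: str, sources: list) -> list:
    """Build a 7z command line for a password-protected .7z archive with hidden file names."""
    return [
        "7z", "a",   # add files to an archive
        "-t7z",      # 7z container format (AES-256 when a password is set)
        "-mhe=on",   # encrypt archive headers, which hides the file names
        "-p",        # no password on the command line: 7z prompts for it instead
        archive,
        *sources,
    ]

cmd = build_7z_command("report_Q3_encrypted.7z", ["report_Q3.xlsx"])
print(" ".join(cmd))
```

Leaving the password off the command line keeps it out of shell history and process listings; the resulting list can be passed to `subprocess.run` when the archive should actually be created.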

8.2 Advanced Key Derivation Configuration (VeraCrypt PIM)

The selection and configuration of the Key Derivation Function (KDF) determine how well the system resists unauthorized password testing.

- KDF Selection: Argon2id is the modern, memory-hard KDF recommended for new VeraCrypt volumes. However, if using the older PBKDF2-HMAC for non-system encryption, the PIM value is critical.

Proof of Work Block: PBKDF2 PIM Target

To confirm which PIM corresponds to 500,000 iterations, a commonly cited threshold for resisting large-scale GPU cracking efforts, apply the VeraCrypt formula for non-system volumes:

Number of Iterations = 15000 + (PIM x 1000)

To achieve 500,000 iterations:

500000 = 15000 + (PIM x 1000)

Solving for PIM:

PIM = (500000 - 15000) / 1000 = 485

If a user selects PBKDF2 for a file container in VeraCrypt, a PIM of 485 (the value VeraCrypt applies when the field is left empty) yields this work factor. Higher values increase the computational barrier further.
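The arithmetic can be checked in code. This sketch relies on the formula given in VeraCrypt's documentation for non-system volumes and file containers, Iterations = 15000 + (PIM x 1000); the helper names are hypothetical.

```python
def iterations_for_pim(pim: int) -> int:
    """Iteration count for a non-system VeraCrypt volume with the given PIM."""
    return 15000 + pim * 1000

def pim_for_iterations(target: int) -> int:
    """Smallest PIM (at least 1) whose iteration count reaches the target."""
    return max(1, -(-(target - 15000) // 1000))  # ceiling division

print(iterations_for_pim(485))      # 500000
print(pim_for_iterations(500_000))  # 485
```

Doubling the work factor to one million iterations would need `pim_for_iterations(1_000_000)`, which evaluates to 985.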

8.3 Share-Safely Example

Once an essential file has been encrypted and shredded, its secure sharing relies on strict separation of data and key.

- Protocol Example: The encrypted file (report_Q3_encrypted.7z) is uploaded to a secure file-sharing service. The share link is configured to expire within 24 hours. The complex, unique password used for the AES-256 encryption must be transmitted separately—for example, via an end-to-end encrypted service like Signal, or delivered verbally over the phone. This layered approach ensures that if the link or the password is intercepted, the other element is unavailable, maintaining data confidentiality.
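A password that is "complex and unique" is best generated rather than invented. A minimal sketch using Python's `secrets` module follows; the alphabet and the 24-character default are illustrative choices, not requirements from any standard.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + "!@#$%^&*-_=+"

def generate_password(length: int = 24) -> str:
    """Return a random password drawn from ALPHABET using a CSPRNG."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))

print(generate_password())
```

The generated value is what travels over the second channel (Signal or a phone call), never alongside the share link.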

Frequently Asked Questions (FAQs)

Is the Gutmann method still necessary for secure deletion?

No. The Gutmann 35-pass method is largely obsolete. Modern HDDs require significantly fewer passes (often one pass of random data or a 3-pass DoD is sufficient) due to advancements in recording technology.

Does shredding a file free up space faster than deleting it?

No. Standard deletion is instantaneous because it only updates a marker. Shredding requires the system to physically read and overwrite the data blocks multiple times, making the process much slower.

Can I use SDelete or Eraser on my SSD or NVMe drive?

You should not use software shredders on SSDs. The drive’s internal FTL and TRIM commands prevent the software from guaranteeing physical data overwriting. Use the hardware-level ATA Secure Erase instead.

What is the main risk of not wiping free space?

The primary risk is data remanence. Fragments of previously deleted sensitive information remain in the unallocated space, allowing forensic recovery tools to reconstruct proprietary documents or personal data.

What is the most secure erasure method for an SSD if I plan to recycle the computer?

For enterprise or regulatory compliance, the most secure method is physical destruction (shredding or crushing the media to 4mm particles). Alternatively, use ATA Secure Erase or Cryptographic Erase.

What is the PIM value in VeraCrypt, and should I change it?

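PIM stands for Personal Iterations Multiplier. It controls the number of iterations used by the Key Derivation Function, which determines resistance to brute-force password attacks. Leaving the field empty applies VeraCrypt’s default work factor (equivalent to a PIM of 485 for PBKDF2-HMAC/SHA-512 containers). Raise it for a larger safety margin, and avoid lowering it unless the password is very long.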

Can a hacker bypass a simple password protecting an encrypted file?

Yes. Simple or short passwords are highly vulnerable to automated brute-force and dictionary attacks. Modern systems can test millions of combinations per second, necessitating complex, 16+ character passwords to achieve security measured in years.

What is the difference between data shredding and degaussing?

Shredding is a software-based overwriting process. Degaussing is a hardware method that uses a powerful magnetic field to destroy data on magnetic media (HDDs or tapes). Degaussing is ineffective against SSDs.

If I encrypt a file using 7-Zip, do I need to shred the original?

Yes, absolutely. Encrypting a file creates a new, protected copy. The unencrypted source file remains on the disk until you securely shred the original copy.

Why did SDelete fail with a Could not create free-space cleanup file error?

The SDelete utility could not create the massive temporary file required for the wipe because the system’s %TEMP% environment variable path was broken, typically pointing to a deleted or non-existent folder.

How should I securely share an encrypted file and its password?

Never send the encrypted file and the password via the same channel. Send the encrypted file via email or cloud link with an expiry date, and transmit the unique, complex password via an end-to-end encrypted channel like Signal or over the phone.

If I use full-disk encryption like BitLocker, do I still need to shred files?

Generally, no. With full-disk encryption active, all data on the drive, including deleted fragments in free space, is already stored in ciphertext. As long as the encryption key remains secure, deleted data is safe from recovery.

What standard does Linux’s built-in shred command use?

The GNU shred utility does not implement a named standard. By default it overwrites the file with three passes of pseudo-random data, and the optional -z flag adds a final zero-fill pass to hide the shredding activity. Dedicated tools like sfill are used for cleaning free space on Linux.

Why is physical destruction often recommended for NVMe drives?

Physical destruction (shredding) is recommended because NVMe architecture makes verification of software or firmware-level erasure challenging across different vendors. Destruction guarantees that the memory chips cannot be accessed.

Conclusion

Secure file destruction is defined not by the operating system’s logical commands, but by physical verification of data erasure. Simple file deletion is a deceptive operation that leaves sensitive remnants recoverable by forensic tools.

For data stored on Hard Disk Drives (HDDs), the correct procedure involves deploying secure wiping tools like SDelete or Eraser configured to use the efficient and compliant DoD 5220.22-M standard (3-7 passes) to clean both active files and all unallocated free space.

For data stored on SSDs and NVMe drives, software-based shredding is technically ineffective due to the Flash Translation Layer and TRIM commands. Relying on these tools for secure deletion on flash memory devices creates a dangerous security gap. The only verifiable methods for SSD sanitation are hardware-level commands: ATA Secure Erase or Cryptographic Erase, or, for maximum assurance, physical media destruction.

Ultimately, secure deletion must be integrated with strong encryption. Users must shred the original, unencrypted source file immediately after generating an encrypted copy, and they must protect the encryption key with a high iteration count (such as a PIM of 485 or higher for VeraCrypt PBKDF2 containers) so that the cryptographic defense resists modern brute-force techniques. Security requires meticulous attention to the lifecycle of data, from creation and encryption to its irreversible destruction.