AI Plus Encryption: Mastering Private On-Device Models and Differential Privacy

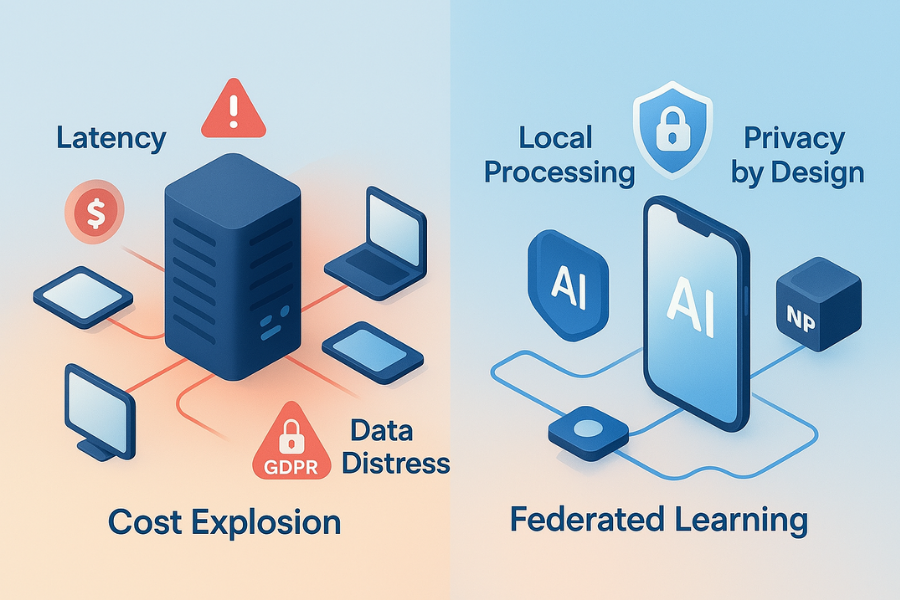

This expert overview, brought to you by Newsoftwares.net, details the architectural shift required for private, production-level AI. Protecting AI models requires shifting away from centralized data collection toward three architectural pillars: Federated Learning (FL) for decentralized training, Differential Privacy (DP) for statistical data protection, and Homomorphic Encryption (HE) for secure inference on sensitive inputs. The immediate answer to scalable privacy is layering DP atop FL, using frameworks such as Opacus and TensorFlow Privacy. This hybrid approach provides a quantifiable privacy guarantee, reduces regulatory risk, and supports sophisticated on-device features without trading user convenience for data security.


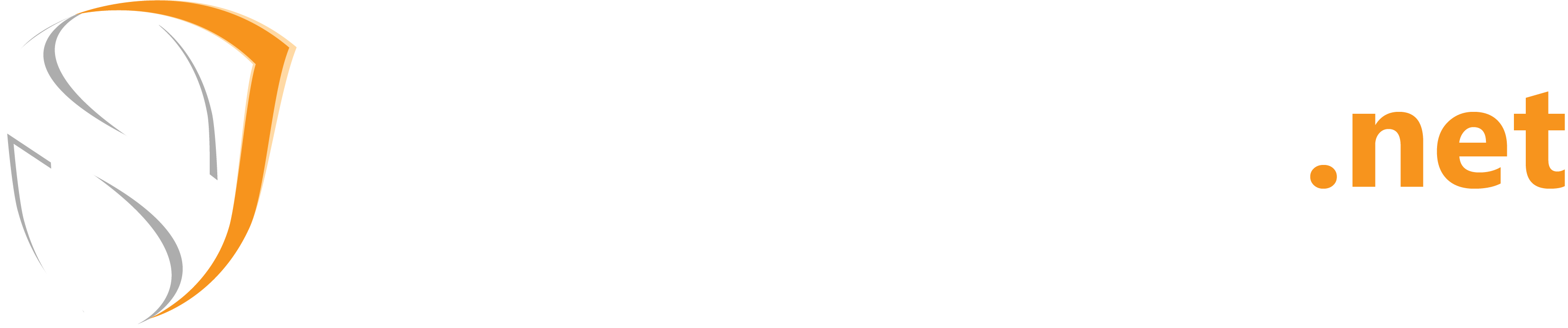

If an organization is building production-level AI today, pure centralized data models are obsolete due to escalating operational costs and rigid regulatory pressure, such as GDPR and HIPAA. The definitive solution for privacy-preserving model training is Federated Learning paired with Differentially Private Stochastic Gradient Descent (DP-SGD). This hybrid approach allows model training across millions of user devices, keeping raw data local, while using DP to mathematically shield individual contributions from reconstruction or leakage during the aggregation of model updates.

The Privacy Engineering Gap Statement: Existing guides often describe Differential Privacy (DP) and Homomorphic Encryption (HE) separately or focus only on theoretical guarantees. This report provides an integrated, action-oriented overview that includes technical tutorials (TensorFlow Privacy/Opacus), quantitative performance benchmarks, and a clear matrix for selecting the optimal privacy-enhancing technology (PET) based on specific AI deployment needs (on-device versus secure server-side inference).

How to Build Mathematically Private AI: Outcomes Summary

- Secure Training: Use Federated Learning (FL) combined with DP-SGD to keep raw training data local and inject calibrated Gaussian noise into aggregated model gradients.

- Private Guarantees: Select an optimal privacy budget (epsilon) to balance utility and guarantee that altering one user’s record minimally affects the statistical model output.

- Secure Inference: Deploy advanced Homomorphic Encryption (HE) or Secure Multi-Party Computation (SMPC) to allow cloud processing of highly sensitive data (like biometric inputs) without ever requiring decryption by the service provider.

Part I: The Foundation of Private AI Architecture

1. The Global Pivot to On-Device Intelligence

The practice of requiring all user data to be shipped to a central cloud for processing is increasingly unsustainable. This architectural transformation is driven by a complex interplay of cost, latency, and stringent data compliance requirements. Centralized AI systems, while offering initial simplicity, rapidly created massive challenges, primarily the “Expense Explosion” from exponential usage and the “Latency Labyrinth” that prevented real-time applications from performing optimally. Applications requiring sub-second response times, such as real-time video analytics or predictive text, cannot tolerate the delays inherent in cloud round trips.

Decentralization, particularly through techniques like Federated Learning (FL), fundamentally reduces legal risk by solving the “Data Distress” problem. FL ensures that raw, sensitive inputs, such as health information or Gboard typing patterns, remain localized on the user’s device. Only model updates, which are significantly less sensitive than the raw data, are shared for aggregation. This adherence to data minimization principles directly aligns with requirements imposed by global regulations like GDPR and HIPAA. The principle is straightforward: if raw data is never centralized, the organization avoids much of the regulatory burden and financial risk associated with mass data centralization and subsequent data breaches. This means that Privacy Enhancing Technologies (PETs) like Local Differential Privacy (LDP) are not just academic tools but critical compliance and cost-mitigation strategies.

Major technology leaders recognized this necessity early. Apple, for instance, pioneered the adoption of Local Differential Privacy (LDP) starting with iOS 10 and macOS Sierra. This mechanism allows Apple to crowd-learn valuable aggregate insights, such as popular photo scenes or trending words, without the personally identifiable information ever leaving the device. This move to on-device processing, leveraging specialized Neural Processing Units (NPUs), defines the next phase of AI, ensuring that features like real-time translation or updated suggestions are both high-performance and intrinsically private.

2. Differential Privacy: The Core Mathematical Guarantee

Differential Privacy (DP) is the most widely adopted mathematical framework for quantifying and guaranteeing privacy loss in statistical data release. The core promise of DP is powerful: an observer seeing the final statistical output cannot tell whether a specific individual’s data was included in the computation, limiting the leakage of private information. This guarantee is achieved by adding carefully calibrated noise to the output of statistical computations.

The privacy guarantee is quantified by two parameters, epsilon ($\epsilon$) and delta ($\delta$), which together define $(\epsilon, \delta)$-DP. Epsilon bounds the privacy loss: altering or removing one user’s record from the dataset can change the probability of any output by a factor of at most $e^{\epsilon}$. Smaller values of $\epsilon$ require more noise, so they give stronger privacy protection but inherently result in higher utility loss, meaning the resulting statistics or model may be less accurate.

Delta ($\delta$) is typically a very small, non-zero probability (e.g., $10^{-5}$), loosely interpreted as the chance that the strong $\epsilon$ guarantee will fail. Modern machine learning relies on approximate $(\epsilon, \delta)$-DP because pure DP ($\delta = 0$) is often too mathematically restrictive for complex computations, especially those involving continuous data or high-dimensional vectors. This necessity of using approximate DP reveals a critical nuance: real-world Differential Privacy in complex ML models inherently accepts a tiny, non-zero risk of privacy breach ($\delta$). The engineering commitment is to ensure this risk remains negligible.
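For reference, the two parameters fit together in the standard formal definition below. The symbols $\mathcal{M}$ (the randomized mechanism), $D$ and $D'$ (two datasets differing in one record), and $S$ (any set of possible outputs) are notation introduced here for illustration. A mechanism $\mathcal{M}$ is $(\epsilon, \delta)$-differentially private if, for all such $D$, $D'$, and $S$:

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\epsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta
$$

Setting $\delta = 0$ recovers pure $\epsilon$-DP, where the multiplicative bound $e^{\epsilon}$ holds with no additive slack.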

Noise calibration is essential: additive noise, either Laplace or Gaussian, is scaled according to the function’s global sensitivity and the desired $\epsilon$ and $\delta$ budget. While the Laplace mechanism offers superior accuracy for releasing simple, single-dimensional statistics, the Gaussian mechanism is the standard choice for the high-dimensional gradient vectors used in deep learning, providing the required $(\epsilon, \delta)$-DP for algorithms like DP-SGD.
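To make the calibration concrete, here is a minimal sketch using only the Python standard library. The function names are hypothetical, the sensitivities are assumed values, and the Gaussian formula is the classical textbook bound, which is only valid for $0 < \epsilon < 1$; production libraries use tighter accounting.

```python
import math
import random


def laplace_scale(l1_sensitivity: float, epsilon: float) -> float:
    """Laplace scale b = sensitivity / epsilon, giving pure epsilon-DP."""
    return l1_sensitivity / epsilon


def gaussian_sigma(l2_sensitivity: float, epsilon: float, delta: float) -> float:
    """Classical Gaussian-mechanism bound; only valid for 0 < epsilon < 1."""
    if not 0.0 < epsilon < 1.0:
        raise ValueError("this classical bound assumes 0 < epsilon < 1")
    return math.sqrt(2.0 * math.log(1.25 / delta)) * l2_sensitivity / epsilon


def laplace_noise(scale: float, rng: random.Random) -> float:
    """The difference of two i.i.d. exponentials is Laplace(0, scale)."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


rng = random.Random(0)  # fixed seed for reproducibility only; not secure randomness

# Counting query: one person changes the count by at most 1 (L1 sensitivity 1).
noisy_count = 1000 + laplace_noise(laplace_scale(1.0, epsilon=0.5), rng)

# Vector release whose L2 sensitivity is assumed to be 1.0.
sigma = gaussian_sigma(1.0, epsilon=0.5, delta=1e-5)
noisy_vector = [v + rng.gauss(0.0, sigma) for v in [0.2, -0.1, 0.4]]
```

Note how the Gaussian standard deviation grows only with the square root of $\ln(1/\delta)$, which is why a very small $\delta$ is affordable in practice.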

Part II: Implementation Tutorial: Differential Privacy (DP-SGD)

Differentially Private Stochastic Gradient Descent (DP-SGD) is the current industry standard algorithm for training private deep learning models. It requires adapting standard optimizers to incorporate controlled per-sample clipping and noise injection.

3. Preparing for Private Training: The DP-SGD Pipeline

The successful implementation of DP-SGD requires integrating three specific mechanisms into the standard gradient descent process: per-sample gradient clipping, Gaussian noise injection calibrated by a noise multiplier ($\sigma$), and rigorous tracking of the cumulative privacy loss, or budget ($\epsilon$).

Prerequisites and Safety: The implementation requires a Python 3.8+ environment and the installation of specialized libraries such as TensorFlow Privacy or Opacus (for PyTorch). Practitioners must be aware that the introduction of calibrated noise inevitably leads to utility degradation (a drop in model accuracy). It is mandatory engineering practice to always benchmark the final private model against its non-private baseline to understand the privacy-utility trade-off.

Success hinges on meticulously tuning the three core hyperparameters:

- L2 Clipping Norm (C): This mathematically bounds the maximum magnitude of each individual gradient contribution. This step ensures that a single, unusual data point cannot disproportionately influence the model updates.

- Noise Multiplier ($\sigma$): This value controls the scale of the Gaussian noise added to the clipped gradients; the noise standard deviation is $\sigma \cdot C$. A higher multiplier means more noise and thus stronger privacy (lower $\epsilon$).

- Microbatches/Batch Size: This defines the granularity at which gradients are clipped. With a microbatch size of 1, clipping is applied to each example's gradient individually, which preserves the most gradient signal for a given clipping norm. Larger microbatches reduce computational cost but clip averaged gradients, which typically costs accuracy.
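The interaction of the three hyperparameters above can be sketched as a single DP-SGD update in plain Python. This is an illustrative sketch with hypothetical function names, operating on lists of floats rather than tensors, and it is not how either library implements the step internally.

```python
import math
import random


def clip_to_norm(grad, clip_norm):
    """Scale a gradient down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_sample_grads, clip_norm, noise_multiplier, lr, rng):
    """One update: clip each example's gradient, sum, add noise, average."""
    batch_size = len(per_sample_grads)
    clipped = [clip_to_norm(g, clip_norm) for g in per_sample_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # Noise standard deviation is noise_multiplier * clip_norm per coordinate.
    noisy = [s + rng.gauss(0.0, noise_multiplier * clip_norm) for s in summed]
    averaged = [n / batch_size for n in noisy]
    return [p - lr * a for p, a in zip(params, averaged)]


rng = random.Random(0)  # seeded for reproducibility only
grads = [[3.0, 4.0], [0.3, 0.4]]  # first gradient has norm 5, second has norm 0.5
new_params = dp_sgd_step([0.0, 0.0], grads, clip_norm=1.0,
                         noise_multiplier=1.1, lr=0.1, rng=rng)
```

Because the noise is added to the sum of clipped gradients, one unusual example can shift the sum by at most `clip_norm`, which is exactly the sensitivity the noise is calibrated against.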

4. How To: Implementing DP-SGD with TensorFlow Privacy

TensorFlow Privacy simplifies the application of DP by providing wrappers for standard Keras optimizers, automating the clipping and noise injection process.

Detailed Steps: DP-SGD in TensorFlow/Keras

- Installation:

Action: Install the necessary library using the standard package manager command:

    pip install tensorflow-privacy

- Model Definition:

Action: Define the standard Keras sequential or functional model architecture suitable for the task.

- Set Hyperparameters:

Action: Define the training and privacy parameters. For example:

    batch_size = 32                # Example value; must be divisible by num_microbatches.
    l2_norm_clip = 1.0             # The boundary for clipping individual gradients.
    noise_multiplier = 1.1         # A common starting point for strong privacy.
    num_microbatches = batch_size  # One example per microbatch: per-example clipping, highest compute cost.
    target_epsilon = 8.0           # The desired maximum privacy budget for the entire training run.

- Wrap Optimizer:

Action: Instantiate the custom differentially private optimizer. The standard optimizer (e.g., Adam) must be replaced by the library’s specialized class:

    from tensorflow_privacy.privacy.optimizers.dp_optimizer_keras import DPKerasAdamOptimizer

    optimizer = DPKerasAdamOptimizer(
        l2_norm_clip=l2_norm_clip,
        noise_multiplier=noise_multiplier,
        num_microbatches=num_microbatches,
        learning_rate=0.001,
    )

- Compile and Train:

Action: The Keras model is compiled using the wrapped optimizer and trained normally using model.fit(). The loss must be left unreduced (for example, a Keras loss constructed with reduction=tf.losses.Reduction.NONE) so that the optimizer can clip each microbatch separately.

- Verification: Calculating Achieved epsilon:

Verify: After training is complete, the cumulative privacy loss must be calculated. The library provides a tool that uses the RDP accountant method to calculate the final achieved $\epsilon$ based on the total number of steps, data size, and noise multiplier, which can then be compared against target_epsilon. This calculation is not optional: it serves as the necessary “proof of work” to validate the privacy guarantee provided to users and regulators.
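To show what an RDP-style accountant computes, here is a deliberately simplified sketch. It is not the TensorFlow Privacy tool: it composes the Rényi DP of the plain Gaussian mechanism over all steps and ignores the privacy amplification from minibatch subsampling, so it returns a valid but far looser $\epsilon$ than the library would report. The function name and the grid of orders are assumptions made for illustration.

```python
import math


def gaussian_rdp_epsilon(noise_multiplier, steps, delta, orders=None):
    """Upper-bound epsilon for `steps` Gaussian releases with sensitivity 1.

    Uses two standard facts: the Gaussian mechanism satisfies
    (alpha, alpha / (2 * sigma^2))-RDP, RDP composes additively, and
    (alpha, rho)-RDP implies (rho + ln(1/delta) / (alpha - 1), delta)-DP.
    """
    if orders is None:
        orders = [1.0 + x / 10.0 for x in range(1, 1000)]
    best = math.inf
    for alpha in orders:
        rdp = steps * alpha / (2.0 * noise_multiplier ** 2)
        eps = rdp + math.log(1.0 / delta) / (alpha - 1.0)
        best = min(best, eps)
    return best


# Hypothetical run: 100 noisy releases with noise multiplier 20, delta = 1e-5.
achieved = gaussian_rdp_epsilon(noise_multiplier=20.0, steps=100, delta=1e-5)
```

The accountant searches over orders because no single order is best for every combination of steps, noise, and $\delta$; the library tool performs the same kind of minimization with the subsampled mechanism.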

5. How To: Implementing DP-SGD with PyTorch Opacus

Opacus is the preferred library for applying DP-SGD to PyTorch models, offering a streamlined PrivacyEngine API that simplifies complex transformations.

Detailed Steps: DP-SGD in PyTorch/Opacus

- Installation:

Action: Install the Opacus library:

    pip install opacus

- Prepare Model and Components:

Action: Define the standard PyTorch nn.Module model, the Optimizer (e.g., SGD or Adam), and the DataLoader.

- Validate Model Structure:

Action: Opacus requires models to support efficient per-sample gradient computation, which some standard layers may not. Developers should use the built-in validators to check for compliance before starting:

    from opacus.validators import ModuleValidator

    errors = ModuleValidator.validate(model, strict=False)  # An empty list means the model is compatible.

- Instantiate Privacy Engine:

Action: Define the privacy parameters, including the noise multiplier, the maximum gradient norm (clipping norm), and the target delta ($\delta$). The snippets below use the Opacus 1.x API, in which the sampling rate is derived from the DataLoader and the RDP orders (alphas) default inside the accountant:

    from opacus import PrivacyEngine

    # Recommended delta: smaller than the inverse of the training set size.
    target_delta = 1e-5

    privacy_engine = PrivacyEngine()

- Wrap Components:

Action: Use the make_private method to transparently inject privacy hooks into the training components:

    model, optimizer, data_loader = privacy_engine.make_private(
        module=model,
        optimizer=optimizer,
        data_loader=data_loader,
        noise_multiplier=1.0,
        max_grad_norm=1.0,  # Equivalent to the L2 clipping norm C.
    )

- Training Loop:

Action: The standard PyTorch training loop proceeds. Opacus captures per-sample gradients during the backward() call and applies gradient clipping and noise addition in optimizer.step(), without requiring manual modification of the training logic.

- Verification: After training, the final achieved epsilon is queried from the engine:

    final_epsilon = privacy_engine.get_epsilon(delta=target_delta)

The true engineering challenge of DP-SGD centers on correctly selecting the L2 clipping norm (C). If C is set too low, most gradients are drastically reduced, resulting in vanishing gradients, hampered learning, and model underfitting. Conversely, if C is too high, almost nothing is clipped, but the injected noise, whose standard deviation scales with C, grows large relative to the gradient signal and degrades accuracy. Therefore, the implementer must execute initial non-private training runs solely to profile the per-sample gradient norm distribution (e.g., finding the 80th or 90th percentile) and set C accordingly. This profiling step, often omitted in high-level guides, is an essential component of a successful, utility-preserving DP implementation.
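The profiling step can be sketched as follows, assuming the per-sample gradients from a non-private run have already been collected as lists of floats. The helper names are hypothetical, and the nearest-rank percentile is one simple choice among several.

```python
import math


def l2_norm(vec):
    """Euclidean norm of one flattened per-sample gradient."""
    return math.sqrt(sum(v * v for v in vec))


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100.0))
    return ordered[rank - 1]


def suggest_clip_norm(per_sample_grads, q=90.0):
    """Suggest C as the q-th percentile of observed per-sample gradient norms."""
    return percentile([l2_norm(g) for g in per_sample_grads], q)


# Toy profile: ten gradients whose norms are 1.0, 2.0, ..., 10.0.
profiled = [[float(i), 0.0] for i in range(1, 11)]
clip_norm = suggest_clip_norm(profiled, q=90.0)
```

Choosing a high percentile means only the largest 10 to 20 percent of gradients are clipped, which limits bias while keeping the noise scale tied to typical gradient magnitudes.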

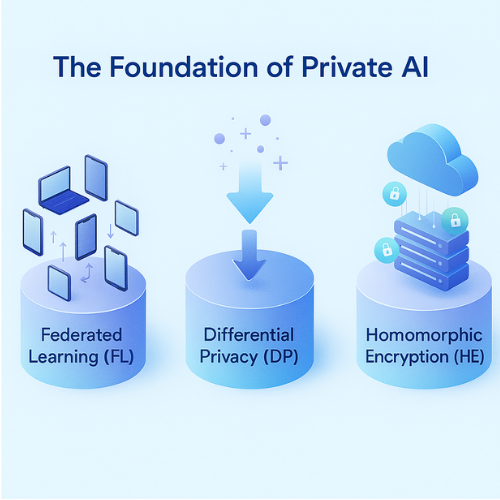

Part III: Cryptographic Architectures (HE and SMPC)

While Differential Privacy manages training data aggregates statistically, cryptographic Privacy Enhancing Technologies (PETs) secure data during computation (inference) with mathematical certainty, preventing decryption by unauthorized parties.

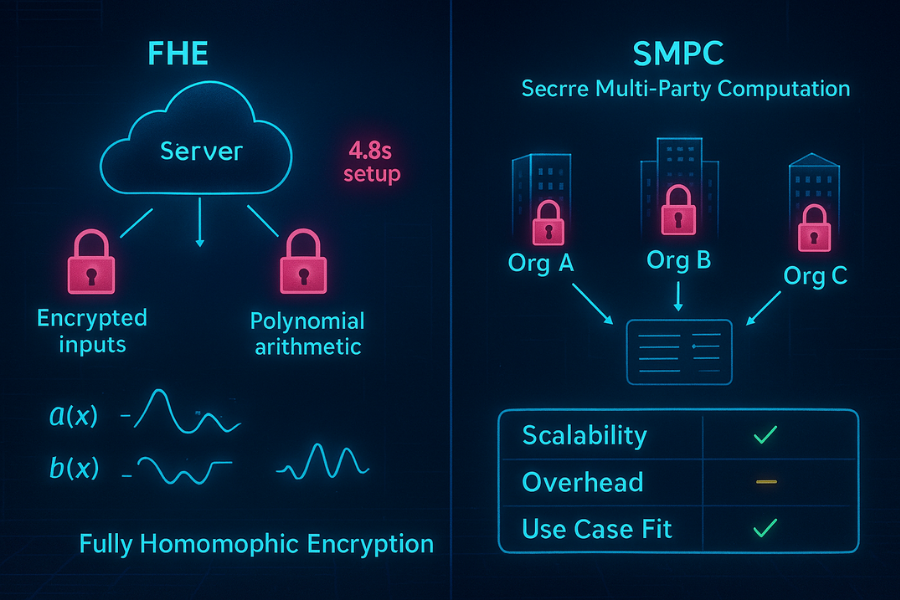

6. Homomorphic Encryption (HE): Inference on Ciphertext

Homomorphic Encryption (HE) allows a third party, such as a cloud provider, to perform mathematical operations (arbitrary ones, in the fully homomorphic case) on encrypted data without ever needing the decryption key or seeing the plaintext. This capability offers very strong privacy for inference-as-a-service, crucial for extremely sensitive inputs like biometric data or proprietary financial algorithms.
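The idea of computing on ciphertext can be illustrated with a toy version of the Paillier cryptosystem. Paillier is a swapped-in, simpler technique: it is only additively homomorphic (a partially homomorphic scheme, not FHE and not CKKS), and the tiny hard-coded primes below are illustrative assumptions that make the sketch completely insecure.

```python
import math
import random


def keygen(p=1789, q=1861):
    """Toy key generation from two small primes (insecure, demo only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    g = n + 1
    mu = pow((pow(g, lam, n * n) - 1) // n, -1, n)  # modular inverse, Python 3.8+
    return (n, g), (lam, mu, n)


def encrypt(public_key, message, rng):
    n, g = public_key
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:  # r must be invertible modulo n
        r = rng.randrange(1, n)
    return (pow(g, message, n * n) * pow(r, n, n * n)) % (n * n)


def decrypt(private_key, ciphertext):
    lam, mu, n = private_key
    return ((pow(ciphertext, lam, n * n) - 1) // n * mu) % n


def add_encrypted(public_key, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    n, _ = public_key
    return (c1 * c2) % (n * n)


rng = random.Random(0)  # seeded for reproducibility; real systems need secure randomness
public_key, private_key = keygen()
c_sum = add_encrypted(public_key, encrypt(public_key, 17, rng), encrypt(public_key, 25, rng))
total = decrypt(private_key, c_sum)  # the server never saw 17 or 25 in the clear
```

The party holding only `public_key` and the ciphertexts can compute `c_sum`, but only the holder of `private_key` can read the result, which is the trust split that HE-based inference relies on.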

The complexity of the required operation dictates the type of HE scheme used:

- Fully Homomorphic Encryption (FHE): Capable of performing unlimited additions and multiplications, allowing for the computation of any function on encrypted data. It is the most powerful but the most computationally demanding.

- Leveled Homomorphic Encryption (LHE): Supports addition and multiplication but allows only a limited number of operations (cryptographic depth) before the ciphertext becomes too noisy or large to handle.

- SHE (Shift-accumulation HE): Specialized HE schemes for deep learning, such as those that use binary-operation-friendly encryption, convert complex non-linear functions (like ReLU activations or Max Pooling) into simpler, cheaper operations like shifts and additions. This dramatically reduces the critical multiplicative depth overhead, enabling deeper network architectures.

The primary roadblock for the widespread deployment of HE has historically been performance. Recent studies highlight a specific latency reality: for popular schemes like CKKS-based FHE, the initial setup (key generation and data encoding) is the dominant runtime factor, typically consuming around 4.8 seconds. In contrast, the computation of each subsequent neural network layer adds a negligible cost, around 0.02 seconds. This pattern indicates that HE is best suited for complex, deep network tasks where the high front-loaded setup cost can be amortized across multiple layers or inferences.

Accelerating HE performance has become a major focus. Optimized software techniques, such as memory-efficient data packing schemes, have shown performance improvements of 29× and energy consumption reductions of 30× over prior software baselines. Furthermore, hardware acceleration is proving transformational. Compilers like Google’s CROSS can convert HE primitives into AI operators and leverage existing ASIC AI accelerators (like TPUs) to achieve significant speedups, up to 14× compared to previous many-core CPU work.
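The amortization argument is simple arithmetic, sketched below with the two figures quoted above as defaults. The function name is hypothetical, and the model assumes the setup cost is paid once per session and reused across inferences, which may not hold for every deployment.

```python
def he_latency_seconds(num_layers, num_inferences=1, setup_s=4.8, per_layer_s=0.02):
    """Return (total seconds, seconds per inference) for one HE session."""
    total = setup_s + num_inferences * num_layers * per_layer_s
    return total, total / num_inferences


single_total, single_each = he_latency_seconds(num_layers=50)
batch_total, batch_each = he_latency_seconds(num_layers=50, num_inferences=100)
```

Under these assumptions a single 50-layer inference is dominated by setup, while a session of 100 inferences brings the per-inference cost close to the pure layer cost of about one second.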

Proof of Work: Settings Snapshot (HE Acceleration)

| Metric | Value | Implication for Deployment |
| --- | --- | --- |
| Initial Setup Time (CKKS) | ~4.8 seconds | This high latency must be amortized. FHE is poorly suited for stateless, single-query interactions. |
| Layer Computation Cost | ~0.02 seconds/layer | Once initialized, deeper networks add minimal incremental latency. |
| Software Optimization Gain | 29× performance improvement | Software redesign and optimized data representation are critical precursors to hardware acceleration. |
| Hardware Acceleration Gain (TPU) | Up to 14× speedup | HE is transitioning from pure research into production-scale, accelerated cloud infrastructure. |

7. Secure Multi-Party Computation (SMPC): Collaborative Secrecy

Secure Multi-Party Computation (SMPC) protocols allow distinct organizations to jointly compute the output of a mathematical function (e.g., a loss function during training or a complex analytical query) while mathematically guaranteeing that their individual input data remains secret.

The mechanism often relies on secret sharing, where sensitive inputs or encryption keys are cryptographically split into shares and distributed among the computing parties. This ensures that no single party, including a malicious observer, possesses enough information to reconstruct the original data. Secret sharing schemes of this kind can be information-theoretically secure: fewer than the required number of shares reveal nothing about the secret, regardless of how much computing power an adversary has.
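A minimal sketch of additive secret sharing, one common form of the mechanism described above, shows how several parties can compute a joint sum without revealing their inputs. The modulus, function names, and the three-bank scenario are illustrative assumptions; real SMPC protocols add authentication and protection against parties that deviate from the protocol.

```python
import secrets

PRIME = 2**61 - 1  # public modulus; all arithmetic is done modulo this prime


def share(secret, n_parties):
    """Split a secret into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """All shares together recover the secret; any fewer look uniformly random."""
    return sum(shares) % PRIME


def secure_sum(all_shares):
    """all_shares[i][j] is the share of party i's input held by party j."""
    partial_sums = [sum(column) % PRIME for column in zip(*all_shares)]
    return reconstruct(partial_sums)


# Three banks jointly compute a total without revealing individual figures.
private_inputs = [120, 75, 305]
distributed = [share(value, n_parties=3) for value in private_inputs]
joint_total = secure_sum(distributed)
```

Each party only ever sees one random-looking share of every other party's input, yet the published partial sums combine to the exact total.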

SMPC is often less computationally complex and expensive than Fully Homomorphic Encryption, making it highly valuable in highly regulated sectors like finance and healthcare where data sharing is restricted due to proprietary or privacy concerns, but collaborative analysis is required (e.g., secure fraud detection across multiple banks or joint medical research across hospitals).

The deployment split between HE and SMPC is dictated by the architectural model. HE is ideal for client-server inference (secure outsourcing), while SMPC is best suited for peer-to-peer collaboration (secure joint analysis). The critical challenge for SMPC is its scalability limitation. It requires complex, iterative communications and protocols between all nodes involved in the computation. This high communication overhead severely limits its applicability to large-scale, decentralized systems like Federated Learning environments, often confining practical SMPC deployments to scenarios involving only a “handful of clients” due to the difficulty of scaling complex cryptographic communication protocols.

Part IV: The Engineering Decision Matrix

8. PET Selection: Balancing Privacy, Utility, and Performance

Expert architects understand that no single Privacy Enhancing Technology solves all privacy challenges. A layered strategy is essential, where the optimal technique is selected based on the required privacy guarantee, the system’s scalability demands, and tolerance for computational overhead.

The fundamental trade-off is between mathematical certainty and practical performance: DP provides statistical, highly scalable, and efficient protection, but trades privacy strength (epsilon) for utility (accuracy). Cryptographic PETs (HE/SMPC) offer stronger, mathematically certain security, but introduce extreme computational or communication overhead.

PETs Use-Case Chooser

| Use Case Scenario | Recommended PET | Reasoning/Justification | Scalability/Overhead Profile |
| --- | --- | --- | --- |
| Large-Scale On-Device Analytics (e.g., trending word discovery) | Differential Privacy (LDP) | Mandatory for compliance and massive scale. Low computational footprint on device. | High scalability; utility loss is accepted for high-volume aggregate statistics. |
| Sensitive Collaborative Research (e.g., aggregated clinical trials) | Secure Multi-Party Computation (SMPC) | Requires high accuracy and cryptographic certainty for joint research without revealing individual inputs. | Low scalability (small number of nodes), high communication overhead required. |
| Client-side Biometric Inference on Cloud Platform | Fully Homomorphic Encryption (FHE) | Client data must remain encrypted throughout cloud execution. High privacy demand justifies the setup latency. | Medium/High client-server scalability, extreme computational latency. |
| Preventing Gradient Reconstruction in Federated Learning | Differential Privacy (DP-SGD) | Layered on top of FL to provide mathematical protection against data leakage from shared model updates. | High scalability, low to moderate computational overhead. |
| Hybrid Secure Data Exploration among Small Consortia | Hybrid: LDP + SMPC | Uses LDP for efficient pre-processing of large, raw datasets, then employs SMPC for highly sensitive analysis steps. | Moderate scalability, balances efficiency and security needs. |

9. Proof of Work: Performance Benchmarking

A holistic performance comparison must acknowledge that DP manages training risk, while HE and SMPC primarily manage inference risk or collaborative training. The common denominator is the overhead penalty relative to non-private plaintext computation.

Bench Table: Comparative Performance Overhead

| Metric | Differential Privacy (DP-SGD) | Secure Multi-Party Computation (SMPC) | Homomorphic Encryption (HE) |
| --- | --- | --- | --- |
| Data State Protected | Training data, via released outputs (model updates, gradients) | Data in use (computation on inputs) | Data in use (computation on ciphertext) |
| Primary Overhead Source | Per-sample gradient computation and noise injection | Communication complexity between computing nodes | Cryptographic key manipulation and polynomial arithmetic |
| Performance Penalty | Low to Moderate (Minor percentage increase in training time) | High (Scalability is severely limited by network latency and communication) | Extreme (Orders of magnitude slower, though rapidly accelerating) |
| Trust Model | Trust required in the data curator (central server) to correctly apply and account for noise. | Trust required that a threshold of parties (t) do not collude to reveal secrets. | Trust required only in the underlying cryptographic primitive; no trust needed in the computing server. |

For an AI/ML Researcher, DP-SGD currently offers the most robust platform for large-scale research because it is highly scalable and provides quantifiable risk metrics ($\epsilon$) necessary for publication and regulatory adherence. The current technical focus for this persona must be maximizing utility under a fixed, competitive privacy budget.

For a Small to Mid-sized Business (SMB) Admin handling highly sensitive proprietary data (e.g., customer biometric authentication), if budget allows, the rapidly improving performance of accelerated HE (leveraging TPUs or GPUs) makes it an increasingly viable choice for client-server inference. This eliminates the necessity of trusting the service provider with decryption keys. The extremely high front-loaded latency of FHE setup (~4.8 seconds) dictates that HE deployments must prioritize long-running sessions or massive batch processing to amortize this initial latency, moving away from traditional stateless, low-latency API calls.

Part V: Troubleshooting and Ethical Deployment

10. Advanced Troubleshooting and Failure States

Even mathematically robust PETs are susceptible to failure in deployment, typically due to implementation errors related to parameter tuning, cumulative use (composition), and layer incompatibility.

Troubleshoot Skeleton: Symptom to Fix Table

| Symptom | Root Cause | Non-Destructive Test | Fix/Mitigation Strategy |
| --- | --- | --- | --- |
| Privacy budget exhausted for query... | Composition Error: Sequential operations (queries, retraining cycles) have caused the cumulative epsilon to exceed the defined limit. | Check the privacy budget accountant tool to trace the precise sequence of operations that led to budget exhaustion. | Implement a mandatory monthly privacy budget refresh. Consult stakeholders to raise the epsilon limit, or strictly enforce query rate limits. |
| Model Accuracy Plummets (Fails to Converge) | Miscalibrated Clipping Norm (C): C is set too low, truncating essential high-magnitude gradient information necessary for model convergence. | Disable DP and run a few epochs of non-private training solely to log the mean and high-percentile gradient norms. | Increase C to match the 80th to 90th percentile of the observed gradient norm distribution for the model architecture. |
| Unexpected Training Instability or Gradient Errors | Non-DP-Compatible Layer: The model contains layers that break the per-sample gradient computation requirement (e.g., some custom layers or specific batch norms). | Use Opacus’s ModuleValidator or similar tooling to check all model components for DP compliance. | Swap out non-compliant layers or manually implement custom backward passes that accurately isolate per-sample gradients. |
| SMPC Fails to Complete Computation/Times Out | Communication Overhead: Network latency or the number of collaborating nodes exceeds the feasible limit for the complex cryptographic communication protocols. | Run a synthetic load test profiling inter-node communication bandwidth usage and latency requirements. | Reduce the number of collaborating parties, upgrade network infrastructure, or switch to an efficient DP-based aggregation if cryptographic certainty is not mandatory. |

The most common and dangerous failure state is the Composition Error, which represents the invisible, cumulative failure of DP guarantees over time. The second ranked cause is managing Utility Loss, or the inability to find the optimal trade-off between sufficient noise addition and maintaining usable model accuracy. The third major challenge is the Lack of Trust and Transparency resulting from the inability to effectively communicate the mathematical guarantees of DP ($\epsilon$) to users and regulators.
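A guard against the Composition Error can be sketched as a small budget ledger. It uses basic sequential composition, under which the epsilons of successive releases simply add up; this is a valid but pessimistic rule, and the class and exception names are hypothetical rather than taken from any library.

```python
class BudgetExhausted(Exception):
    """Raised when a release would push cumulative epsilon past the limit."""


class PrivacyBudget:
    def __init__(self, epsilon_limit):
        self.epsilon_limit = epsilon_limit
        self.ledger = []  # (label, epsilon) pairs, kept for auditing

    @property
    def spent(self):
        return sum(eps for _, eps in self.ledger)

    @property
    def remaining(self):
        return self.epsilon_limit - self.spent

    def spend(self, label, epsilon):
        """Record a release, refusing it if the limit would be exceeded."""
        if self.spent + epsilon > self.epsilon_limit:
            raise BudgetExhausted(
                f"{label!r} needs {epsilon}, only {self.remaining:.3f} left"
            )
        self.ledger.append((label, epsilon))


budget = PrivacyBudget(epsilon_limit=1.0)
budget.spend("weekly histogram", 0.5)
budget.spend("model retrain", 0.4)
try:
    budget.spend("ad hoc query", 0.2)  # would bring the total to 1.1
    refused = False
except BudgetExhausted:
    refused = True
```

Keeping the ledger rather than a single running total makes it possible to trace which sequence of operations consumed the budget, which is the diagnostic step named in the table above.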

11. Legal and Ethical Considerations

Differential Privacy is highly valued in regulated environments because it intrinsically supports “Privacy by Design” principles. It directly aids organizations in complying with the UK General Data Protection Regulation (UK GDPR), particularly principles surrounding data minimization and maintaining appropriate security against unauthorized processing. Deploying DP correctly acts as a major risk mitigation tool for data controllers.

However, the field of private AI introduces a complex ethical conflict: the Privacy-Fairness Paradox. When enforcing algorithmic fairness, such as equalized odds, in a model trained on biased data, the process can disproportionately alter the influence of data points from unprivileged subgroups. This can ironically increase the privacy risk, specifically membership inference leakage, for the very subgroups the fairness measure was intended to protect. Therefore, applying standard global DP (uniform noise injection) is insufficient when fairness is a concern. Expert engineers must track the privacy loss ($\epsilon$) specific to sensitive demographic groups and adjust the noise calibration per group to maintain parity in both fairness and privacy guarantees, preventing the model from disproportionately exposing minority data.

The legal effectiveness of DP ultimately relies heavily on communication. Engineers must translate the mathematical abstraction of $\epsilon$ into human-readable risk statements to build stakeholder and user trust. Merely stating $\epsilon$ is meaningless to a regulator or general user. Effective transparency requires demonstrating, for example, that “due to the noise added, an attacker’s belief that your specific data was used can only increase by a quantified factor of $e^{\epsilon}$, a low and mathematically controlled risk.”
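One way to produce such a risk statement is to convert $\epsilon$ into a ceiling on an attacker's updated belief. The sketch below uses the Bayesian reading of pure $\epsilon$-DP, under which the attacker's odds can grow by at most $e^{\epsilon}$; it ignores $\delta$, and the function name and prior values are assumptions chosen for illustration.

```python
import math


def max_posterior(prior, epsilon):
    """Upper bound on an attacker's belief after seeing an epsilon-DP output.

    Pure epsilon-DP multiplies the prior odds by at most e^epsilon.
    """
    posterior_odds = prior / (1.0 - prior) * math.exp(epsilon)
    return posterior_odds / (1.0 + posterior_odds)


# An attacker who initially gives a 1% chance that a record was included:
belief_eps_1 = max_posterior(prior=0.01, epsilon=1.0)
belief_eps_10 = max_posterior(prior=0.01, epsilon=10.0)
```

With $\epsilon = 1$ the attacker's belief can rise from 1% to at most about 2.7%, while with $\epsilon = 10$ the bound exceeds 99%, which conveys the difference far better than the raw numbers do.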

12. Frequently Asked Questions (FAQs)

1. How is Differential Privacy different from traditional anonymization?

DP offers a mathematically rigorous, quantifiable guarantee against re-identification, even when attackers utilize rich auxiliary datasets. Traditional anonymization techniques are brittle and easily compromised by modern data linking attacks.

2. What is the practical impact of the epsilon privacy budget value (e.g., $\epsilon = 1$ vs. $\epsilon = 10$)?

An $\epsilon = 1$ guarantees that the output probability changes by a factor of at most $e^{1} \approx 2.7$ if an individual’s data is altered. An $\epsilon = 10$ allows a massive shift of up to $e^{10} \approx 22{,}000$ times, severely weakening the privacy guarantee and reducing trust.

3. Why can’t Homomorphic Encryption (HE) be used for training large models like GPT?

While FHE inference is advancing, training large models requires an extreme number of high-depth, complex operations (multiplications). The computational overhead for FHE training remains prohibitively high compared to DP-SGD and FL.

4. Is DP-SGD sufficient to protect against all data leakage in Federated Learning?

No. DP-SGD protects the aggregated model update from individual data reconstruction. It is often layered with Secure Aggregation (SA), a cryptographic technique that prevents the central server from viewing individual, un-noised user gradients before they are summed up.

5. How do engineers manage the privacy budget exhaustion problem in continuous DP releases?

The core strategy is rigorous tracking of cumulative privacy loss ($\epsilon$). Organizations must implement query rate limits and establish a budget refresh schedule (e.g., a mandatory monthly refresh) to manage the composition of privacy loss over time. A refresh only restores the guarantee for newly collected data; repeated releases over the same records continue to compose.

6. Does the use of PETs satisfy GDPR automatically?

PETs are powerful technological tools that support compliance with principles like data minimization and security-by-design. However, compliance is a holistic legal assessment: PETs must be correctly deployed and supported by robust organizational policies.

7. What is the primary technical limitation of Secure Multi-Party Computation (SMPC)?

Scalability and communication overhead. SMPC protocols demand intensive cryptographic communication between all parties, making them impractical for large-scale, decentralized scenarios involving many clients.

8. What is the exact role of the L2 clipping norm in DP-SGD?

The L2 clipping norm (C) explicitly bounds the maximum influence that any single training example can exert on the overall model update. This bounding is a mandatory step before noise can be injected effectively.

9. What technical breakthrough is making Homomorphic Encryption viable for inference now?

The key breakthrough is the co-design of FHE algorithms with specialized hardware (ASICs/TPUs) and optimized compilers. These advancements drastically reduce latency by utilizing parallel processing for complex cryptographic polynomial operations.

10. Can I use Laplace noise instead of Gaussian noise in DP-SGD?

The Gaussian mechanism is required for DP-SGD because it robustly supports the high-dimensional gradient vectors and naturally satisfies the required approximate $(\epsilon, \delta)$-DP definition. Laplace noise is only recommended for simple, low-dimensional statistical queries.

11. How does Differential Privacy intersect with algorithmic fairness?

Achieving strong DP can worsen fairness issues. Uniformly applied noise can drown out the signal of small, minority subgroups in the data, simultaneously reducing model accuracy and increasing the relative privacy risk for those groups.

12. What is the difference between Local DP (LDP) and Central DP?

LDP adds noise on the individual device before data transmission (strongest individual privacy, but highest utility loss). Central DP aggregates raw data first, then applies noise to the aggregate result (higher utility, but requires trust in the central data holder).
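The local model can be illustrated with randomized response, a classic LDP mechanism for a single yes/no attribute. It is a swapped-in textbook example rather than the mechanism any particular vendor deploys, and the function names and the 30% true rate are assumptions for the demonstration.

```python
import math
import random


def randomize(bit, p_truth, rng):
    """On-device step: report the true bit with probability p_truth, else flip it."""
    return bit if rng.random() < p_truth else 1 - bit


def epsilon_of(p_truth):
    """Local privacy level of randomized response, for p_truth > 0.5."""
    return math.log(p_truth / (1.0 - p_truth))


def estimate_true_rate(reports, p_truth):
    """Server-side step: debias the observed rate of 1s in the noisy reports."""
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


rng = random.Random(0)  # seeded for reproducibility only
true_bits = [1] * 6000 + [0] * 14000  # hypothetical population, 30% hold the attribute
reports = [randomize(b, p_truth=0.75, rng=rng) for b in true_bits]
estimated_rate = estimate_true_rate(reports, p_truth=0.75)
```

No individual report can be trusted, since a quarter of them are flipped, yet the aggregate estimate lands close to the true 30%; the price is that far more users are needed than under central DP for the same accuracy.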

13. Why is the initial setup time so high for FHE inference?

The high front-loaded latency (~4.8 seconds) is dominated by necessary cryptographic steps: key generation and the complex encoding of data into the polynomial structures required for HE computation.

14. What frameworks support DP implementation in major ML tools?

The current industry standards are TensorFlow Privacy (for Keras/TF) and Opacus (for PyTorch). Both libraries streamline the complex mathematical requirements of the DP-SGD algorithm into manageable software API calls.

15. What is the critical failure mode of pure $\epsilon$-DP ($\delta = 0$)?

Pure $\epsilon$-DP guarantees that the output probability never changes by more than a factor of $e^{\epsilon}$. This absolute guarantee is often too strict, meaning pure $\epsilon$-DP mechanisms cannot handle high-dimensional computations like those in deep learning without severely compromising data utility.

13. Conclusion: The Inevitable Hybrid Future of Private AI

The era of centralized, unprotected data models for Artificial Intelligence is functionally over. The operational cost, the technical latency challenges, and the non-negotiable demands of global regulations (GDPR, HIPAA) have fundamentally shifted AI architecture away from mass data collection and toward verifiable, decentralized privacy.

The path to building a trustworthy, production-ready AI system is defined by the strategic deployment of a hybrid Privacy Enhancing Technology (PET) stack, where different techniques are layered to secure the entire data lifecycle:

- Training and Scale: The most efficient solution for large-scale, decentralized training is Federated Learning paired with Differentially Private Stochastic Gradient Descent (DP-SGD). This stack keeps raw data local while DP statistically shields individual contributions to the model update. The challenge here is precisely calibrating the noise (the $\epsilon$ budget) to maximize model utility without compromising the mathematical privacy guarantee.

- Inference and Certainty: For highly sensitive inputs (e.g., biometrics, financial data) requiring cryptographic certainty, Homomorphic Encryption (HE) is rapidly transitioning from research to viability. This shift is powered by specialized hardware acceleration (TPUs/ASICs), which is reducing the historic, extreme latency of HE, making secure, outsourced inference on encrypted data practical for long-running or complex computations.

- The Trade-Off: The engineering decision is no longer whether to use PETs, but which trade-off to accept. DP provides statistical protection and high scalability, whereas HE and Secure Multi-Party Computation (SMPC) provide cryptographic certainty but with a significant performance or communication overhead penalty.

The industry trajectory, driven by the rise of sophisticated on-device foundation models (e.g., Apple Intelligence leveraging integrated NPUs), confirms that privacy is no longer an optional security feature. It is now a core, hardware-supported function that simultaneously addresses critical latency and operational cost challenges. The future of trustworthy AI is intrinsically private and architecturally layered.